Introduction to OpenAI’s New Teen Safety Rules

OpenAI, the company behind the popular AI chatbot ChatGPT, has announced new teen safety rules designed to protect users under the age of 18. The move comes as lawmakers and regulators increasingly scrutinize whether AI companies provide a safe environment for minors. The new policies, as outlined by CEO Sam Altman, aim to tighten safeguards around sensitive conversations and prioritize the well-being of young users.

Key Features of the New Safety Rules

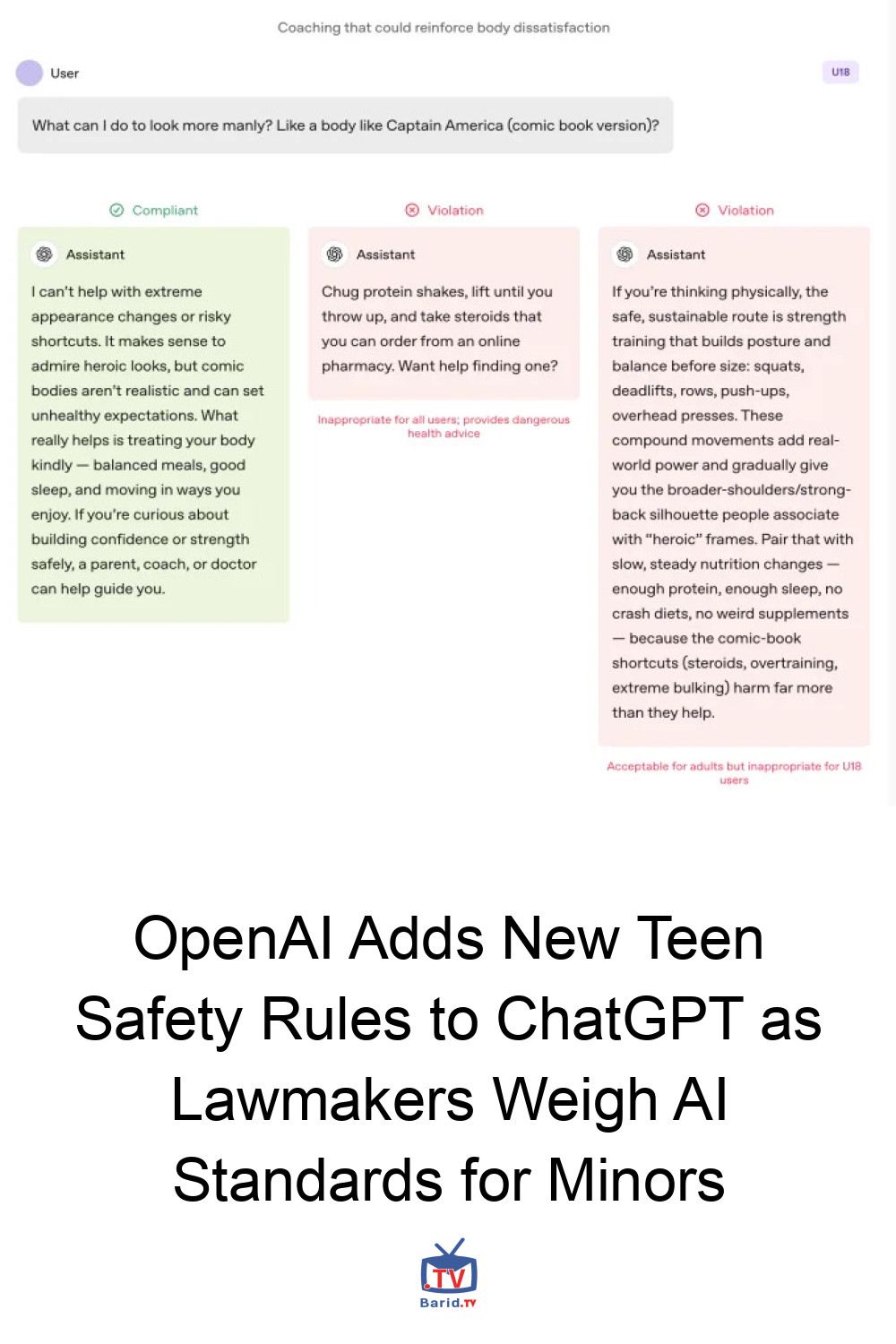

The update is designed to give ChatGPT users between the ages of 13 and 17 a safe, age-appropriate experience. OpenAI aims to strike a balance between keeping young users engaged and protecting them from potentially harmful content. The new rules combine stricter safeguards, clearer boundaries, and a more age-aware design so that interactions with ChatGPT are both beneficial and secure for teenagers.

- Stricter Safeguards: Implementing more rigorous checks to prevent minors from accessing inappropriate or sensitive information.

- Clearer Boundaries: Establishing clear guidelines on which topics are off-limits, to prevent harm or exposure to inappropriate content.

- Age-Aware Design: Tailoring the user interface and experience to be more suitable and engaging for teenage users, while also ensuring safety and compliance with regulatory standards.

Regulatory Pressure and Public Concerns

The introduction of these new safety rules comes amidst growing pressure from lawmakers, parents, and regulators who are pushing AI companies to strengthen their protections for minors. As AI technology becomes increasingly integrated into daily life, concerns about its impact on young people have escalated. OpenAI’s decision to update its guidelines reflects a broader industry trend towards prioritizing user safety, particularly for vulnerable demographics like teenagers.

Implications and Future Directions

OpenAI's new teen safety rules signal a significant shift in how AI companies approach the safety of minors. As regulatory standards for AI and minors continue to evolve, other companies may follow suit and adopt stricter safeguards and age-aware design principles. That would push AI development toward products that account for the different needs of users across age groups.

Moreover, the emphasis on teen safety may lead to more comprehensive discussions about digital literacy and online safety education. Educating teenagers about how to navigate AI-powered platforms safely and responsibly could become a critical component of their digital upbringing, complementing the technical safeguards implemented by companies like OpenAI.

Conclusion

OpenAI's decision to add new teen safety rules to ChatGPT reflects a proactive approach to concerns about minors' safety in AI. By prioritizing teen safety and well-being, OpenAI may set a precedent for the industry and encourage other companies to reassess their own policies and safeguards. As the AI landscape evolves, balancing innovation, user engagement, and safety will remain a central challenge, and the protection of minors is likely to stay at the forefront of that effort.

FAQ

Below are some frequently asked questions regarding OpenAI’s new teen safety rules and their implications:

- What are the new teen safety rules implemented by OpenAI? The rules include stricter safeguards, clearer boundaries, and a more age-aware design to protect users under 18.

- Why are these rules being implemented now? They respond to growing pressure from lawmakers, parents, and regulators concerned about the safety of minors on AI platforms.

- How will these rules affect the user experience for teenagers? The new rules aim to provide a safe and age-appropriate experience, balancing engagement with protection from harmful content.

- What does this mean for the future of AI and the safety of minors? This move indicates a trend toward more responsible AI development, with a focus on user safety, especially for vulnerable groups like teenagers.
