Government agencies often deploy sophisticated new tools with promises of enhanced efficiency and security. A recent WIRED investigation, however, casts a stark shadow over one such tool: Mobile Fortify, a face-recognition app now used by United States immigration agents across the country. Far from being a reliable identifier, the app was never designed for definitive identification, and it was rolled out with a troubling disregard for established privacy protocols.

The Illusion of Verification: A Flawed Tool on the Streets

Launched by the Department of Homeland Security (DHS) in the spring of 2025, Mobile Fortify was ostensibly introduced to “determine or verify” the identities of individuals encountered by DHS officers. Its deployment was explicitly linked to a 2025 executive order aimed at a “total and efficient” crackdown on undocumented immigrants. Yet, despite DHS’s consistent portrayal of Mobile Fortify as a verification tool, its fundamental design and inherent limitations mean it cannot, in fact, provide positive identification.

More Leads, Less Certainty

“Every manufacturer of this technology, every police department with a policy makes very clear that face recognition technology is not capable of providing a positive identification, that it makes mistakes, and that it’s only for generating leads,” explains Nathan Wessler, deputy director of the American Civil Liberties Union’s Speech, Privacy, and Technology Project. This critical distinction—between generating leads and verifying identity—is often lost in the rhetoric surrounding such technologies, with profound implications for civil liberties.

Erosion of Privacy Protections and Oversight

The rushed approval and deployment of Mobile Fortify in May 2025 were facilitated by a concerning internal shift within DHS. Records reveal a deliberate dismantling of centralized privacy reviews and the quiet removal of department-wide limits on facial recognition technology. These changes were reportedly overseen by a former Heritage Foundation lawyer and Project 2025 contributor, now holding a senior privacy role within DHS, raising serious questions about the agency’s commitment to privacy safeguards.

Despite repeated calls for transparency from oversight officials and nonprofit privacy watchdogs, DHS has remained tight-lipped about the specific methods and tools its agents employ. This lack of transparency only exacerbates concerns about accountability and potential misuse.

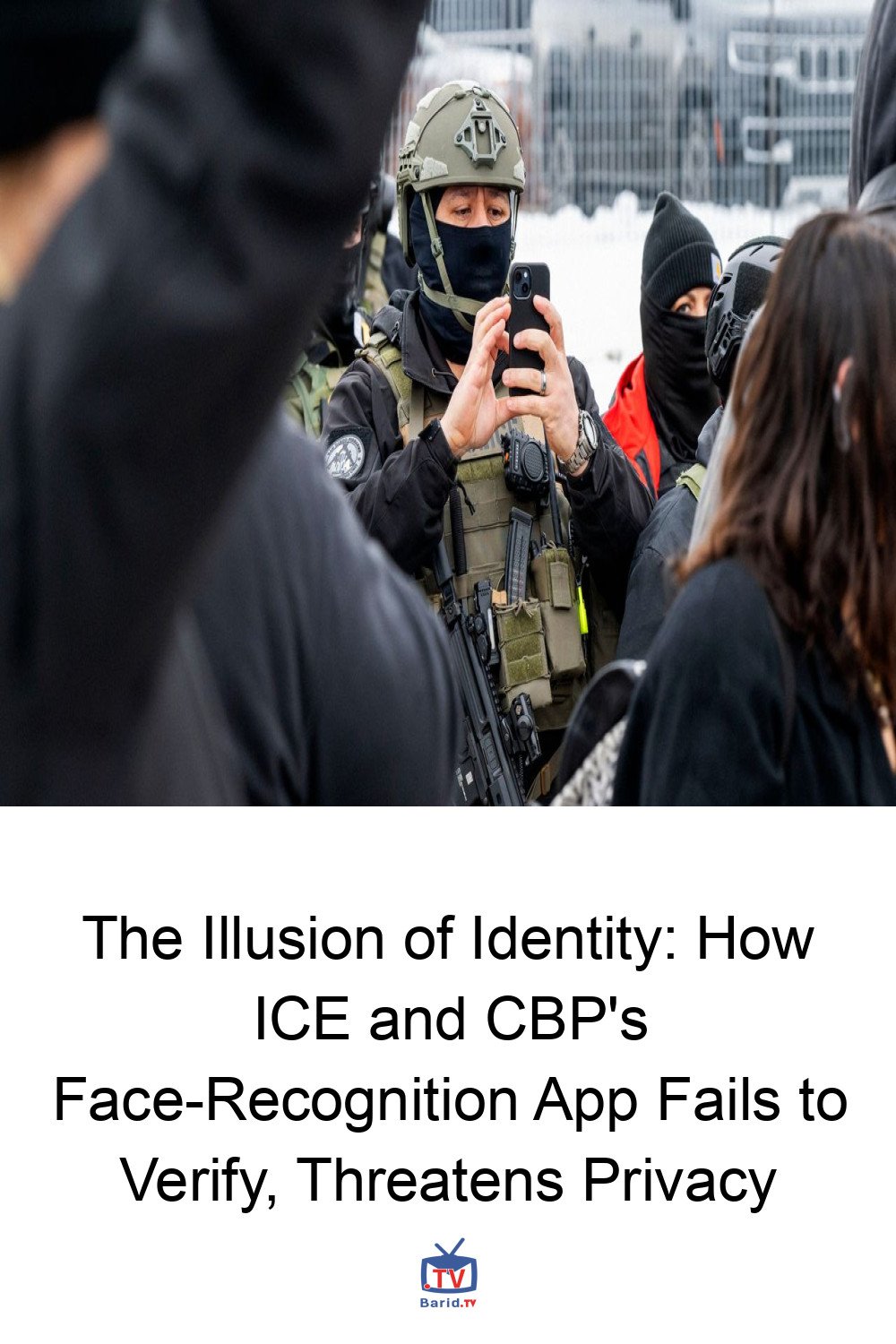

Real-World Misapplications and Disturbing Encounters

The consequences of deploying an unreliable and opaque facial recognition tool are already evident in the field. Mobile Fortify has been used to scan the faces not only of “targeted individuals” but also of US citizens and those merely observing or protesting enforcement activities. Reports detail federal agents informing citizens that their faces were being recorded and would be added to a database without consent.

Questionable Probable Cause

Even more troubling are accounts describing agents escalating encounters based on factors like accent, perceived ethnicity, or skin color, with face scanning then used as a subsequent step. This pattern suggests a broader shift in DHS enforcement towards low-level street encounters culminating in biometric capture, all under a veil of limited transparency.

The technology’s reach extends far beyond border regions, enabling DHS to generate nonconsensual “face prints” of individuals hundreds of miles inland, including, as DHS’s Privacy Office concedes, “US citizens or lawful permanent residents.” The true scope and functionality of Mobile Fortify have come to light primarily through court filings and sworn agent testimony rather than official disclosures.

Case Study: The Oregon Incident

A recent federal lawsuit by the State of Illinois and the City of Chicago revealed that Mobile Fortify has been used “in the field over 100,000 times” since its launch. The app’s limitations, however, were starkly illustrated in testimony given in Oregon last year.

An agent recounted using the app on a handcuffed woman in custody. Although agents physically repositioned her for the camera, causing her to yelp in pain, the first scan returned only a “maybe” match to a woman named Maria. When agents called out “Maria, Maria” and received no response, they ran a second scan, which yielded a “possible” but uncertain result. Asked about probable cause, the agent cited the woman speaking Spanish, her association with apparent noncitizens, and the “possible match.” Crucially, the agent testified that the app provided no confidence score, leaving identification to subjective interpretation: “It’s just an image, your honor. You have to look at the eyes and the nose and the mouth and the lips.” The episode underscores how unreliable the app can be in practice and how much room it leaves for human bias.

Expert Warnings and the Path Forward

“Facial recognition can be wrong, and it has been wrong in the past,” warns Mario Trujillo, a senior staff attorney at the digital-rights nonprofit Electronic Frontier Foundation. “Here, the safeguards you’d expect—confidence scores, clear thresholds, multiple candidate photos—don’t appear to be there.” The fact that the app can produce multiple identities for the same person is not an error, but a known characteristic of the technology, yet it is being used for critical enforcement decisions.

The deployment of Mobile Fortify represents a troubling precedent: a powerful, yet flawed, surveillance technology introduced with minimal oversight and significant implications for privacy and civil liberties. As immigration enforcement increasingly relies on such tools, the urgent need for robust transparency, stringent safeguards, and public accountability becomes paramount to prevent further erosion of fundamental rights.
