Critical ‘DockerDash’ Flaw in Ask Gordon AI Exposed: Docker Images Could Lead to Code Execution

A significant security vulnerability, dubbed ‘DockerDash,’ has been uncovered and patched in Docker’s Ask Gordon AI assistant, a feature integrated into Docker Desktop and the Docker Command-Line Interface (CLI). This critical flaw allowed attackers to execute arbitrary code and exfiltrate sensitive data by embedding malicious instructions within Docker image metadata. Discovered by cybersecurity firm Noma Labs, the vulnerability was addressed by Docker with the release of version 4.50.0 in November 2025, highlighting a growing concern around AI supply chain risks.

Unpacking DockerDash: The Metadata Menace

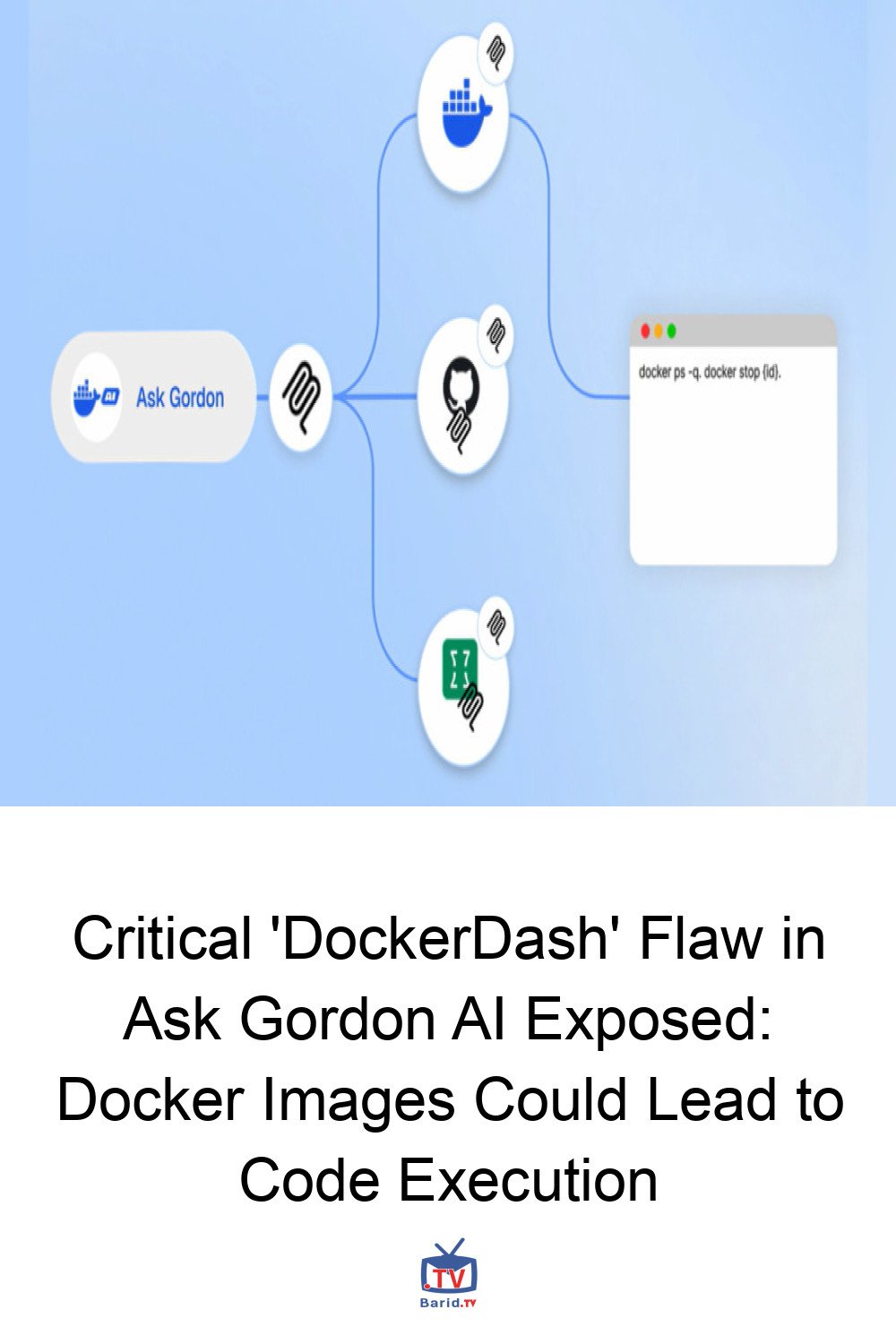

At its core, DockerDash exploits a fundamental trust issue: Ask Gordon AI’s inability to distinguish between benign informational metadata and weaponized executable commands. According to Sasi Levi, security research lead at Noma, the attack unfolds in a simple yet devastating three-stage process:

- A malicious metadata label is embedded within a Docker image.

- Gordon AI reads and interprets this malicious instruction.

- The instruction is forwarded to the Model Context Protocol (MCP) Gateway, which then executes it via MCP tools, all without any validation.

“Every stage happens with zero validation, taking advantage of current agents and MCP Gateway architecture,” Levi explained. This lack of scrutiny transforms seemingly innocuous metadata fields into potent vectors for injection, allowing attackers to bypass established security boundaries.

The Deceptive Simplicity of the Attack Chain

The implications of DockerDash are severe, ranging from critical remote code execution (RCE) for cloud and CLI systems to high-impact data exfiltration for desktop applications. The vulnerability is characterized as a case of Meta-Context Injection, where the MCP Gateway, acting as a bridge between the large language model (LLM) and the local environment, fails to differentiate between standard Docker labels and pre-authorized, runnable internal instructions.

In a hypothetical attack, a threat actor would:

- Craft a malicious Docker image with weaponized instructions embedded in Dockerfile LABEL fields.

- Publish this image to a repository.

- When a victim queries Ask Gordon AI about the image, Gordon reads all metadata, including the malicious labels, without proper differentiation.

- Ask Gordon forwards these parsed instructions to the MCP Gateway, which, treating it as a trusted request, invokes the specified MCP tools.

- The MCP tool executes the command with the victim’s Docker privileges, achieving code execution.

Beyond Code: Data Exfiltration Risks

Beyond code execution, DockerDash also presented a significant data exfiltration risk. This variant of the prompt injection flaw targeted Ask Gordon’s Docker Desktop implementation. By leveraging the assistant’s read-only permissions, attackers could use MCP tools to capture sensitive internal data about the victim’s environment. This could include details on installed tools, container configurations, Docker settings, mounted directories, and even network topology, providing a treasure trove of information for further exploitation.

A Broader AI Supply Chain Challenge

The discovery of DockerDash underscores a critical emerging threat: AI Supply Chain Risk. As Sasi Levi noted, “It proves that your trusted input sources can be used to hide malicious payloads that easily manipulate AI’s execution path.” This vulnerability highlights how the integration of AI models with local environments, particularly through intermediaries like the MCP Gateway, introduces new attack surfaces if contextual trust is not rigorously managed.

It’s also worth noting that Docker version 4.50.0 simultaneously addressed another prompt injection vulnerability, discovered by Pillar Security. This separate flaw could have allowed attackers to hijack the assistant and exfiltrate data by tampering with Docker Hub repository metadata.

Fortifying AI Defenses: The Path Forward

Mitigating this new class of attacks demands a paradigm shift towards zero-trust validation. Organizations must implement stringent checks on all contextual data provided to AI models, ensuring that no input, regardless of its source, is implicitly trusted as an executable command. The DockerDash vulnerability serves as a stark reminder that as AI becomes more integrated into our development tools, securing the AI supply chain is paramount to protecting our digital infrastructure.

For more details, visit our website.

Source: Link

Leave a comment