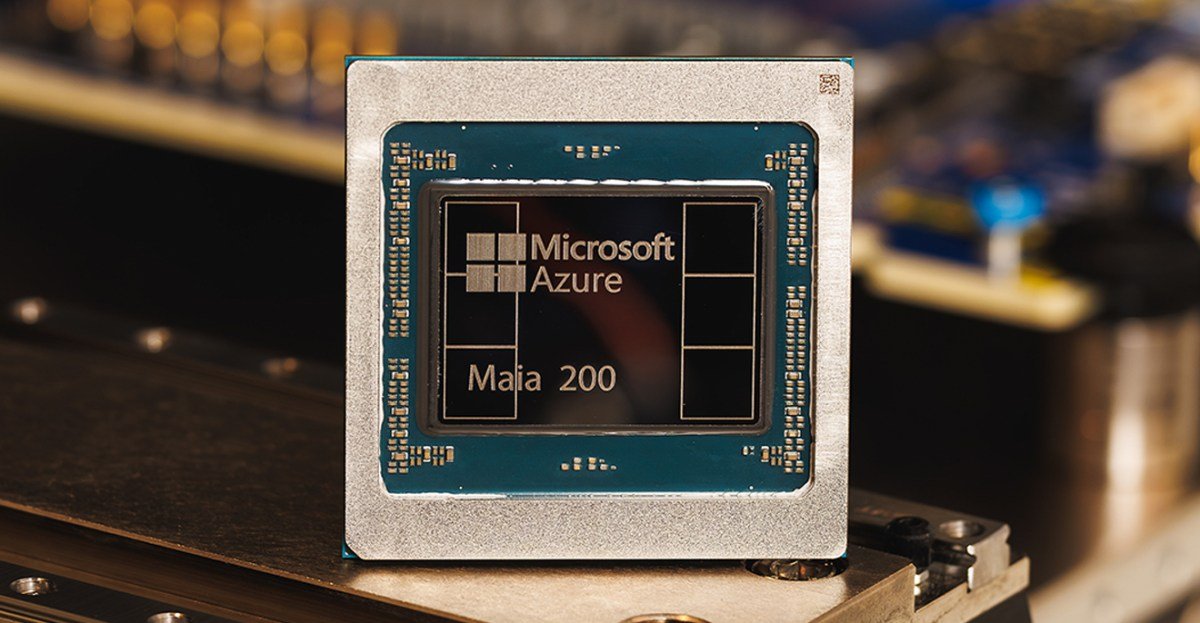

In a move that intensifies the artificial intelligence hardware race, Microsoft has officially unveiled its latest in-house AI accelerator, the Maia 200. The chip is designed for the most demanding AI workloads and is already rolling out to Microsoft’s global data centers, signaling a direct challenge to industry rivals Amazon and Google.

Microsoft’s New AI Powerhouse: The Maia 200

Building on its predecessor, the Maia 100, the Maia 200 represents a significant step forward in Microsoft’s commitment to proprietary AI silicon. Manufactured on TSMC’s 3nm process, the chip contains more than 100 billion transistors. That capacity is engineered to handle the demands of large-scale AI models, both current and future.

Unrivaled Performance Claims

Microsoft is not shy about the Maia 200’s capabilities, directly positioning it against the offerings from its closest competitors. According to the company, the Maia 200 AI accelerator delivers:

- Three times the FP4 performance of Amazon’s third-generation Trainium chip.

- Superior FP8 performance compared to Google’s seventh-generation TPU.

Scott Guthrie, executive vice president of Microsoft’s Cloud and AI division, emphasized the chip’s future-proofing, stating, “Maia 200 can effortlessly run today’s largest models, with plenty of headroom for even bigger models in the future.” Furthermore, Guthrie highlighted its operational efficiency, noting, “Maia 200 is also the most efficient inference system Microsoft has ever deployed, with 30 percent better performance per dollar than the latest generation hardware in our fleet today.”

Strategic Deployment and Ecosystem Impact

The Maia 200 is slated for immediate strategic deployment across Microsoft’s vast AI ecosystem. It will be instrumental in hosting advanced models like OpenAI’s GPT-5.2, a testament to Microsoft’s deep partnership with OpenAI. Additionally, the chip will power critical services within Microsoft Foundry and enhance the capabilities of Microsoft 365 Copilot, bringing advanced AI features to enterprise users.

Beyond internal applications, Microsoft is also engaging the broader AI community. The company’s Superintelligence team will be among the first to use the Maia 200, and an early preview of the Maia 200 software development kit (SDK) is being offered to academics, developers, AI labs, and contributors to open-source model projects. The initiative aims to accelerate innovation and adoption across the AI landscape.

The Escalating AI Chip Arms Race

Microsoft’s assertive stance with the Maia 200 marks a shift from its earlier, more reserved approach with the Maia 100. Its direct comparisons with rival chips signal an intensifying battle among tech giants to dominate the AI hardware market. While Microsoft promotes its new silicon, both Google and Amazon are developing their next-generation AI chips. Amazon, for instance, is collaborating with Nvidia to integrate its upcoming Trainium4 chip with NVLink 6 and Nvidia’s MGX rack architecture, indicating a multi-faceted approach to AI acceleration.

The initial deployment of Maia 200 chips has begun in Microsoft’s Azure US Central data center region, with expansion to additional regions planned. The rollout underscores Microsoft’s ambition to solidify its position as a leader in cloud AI infrastructure.
