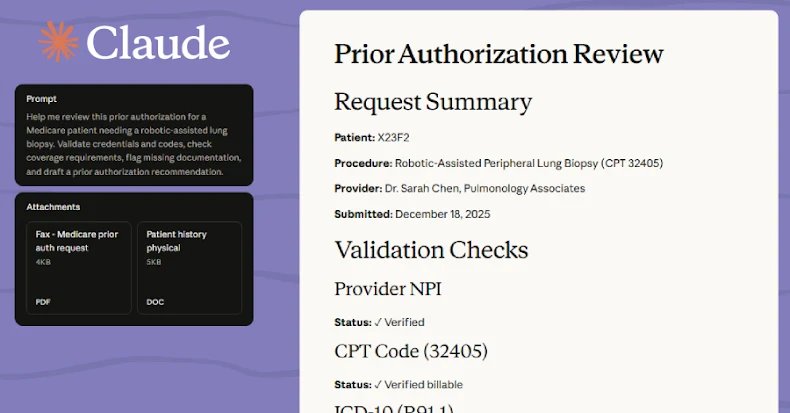

Anthropic Unveils Claude for Healthcare: A New Era of Personalized Health Insights

In a significant stride towards integrating artificial intelligence into personal health management, Anthropic has launched “Claude for Healthcare.” This innovative suite of features empowers users of its Claude platform to gain deeper, more accessible insights into their personal health information, marking a pivotal moment in the digital health revolution.

Empowering Patients with Secure, Understandable Health Data

U.S. subscribers to Claude Pro and Max plans can now securely connect Claude to their lab results and health records through integrations with HealthEx and Function. Apple Health and Android Health Connect integrations are slated to follow shortly in Claude’s mobile apps, further expanding access. This secure connectivity enables several capabilities:

- Summarizing Medical History: Claude can distill complex medical records into concise, easy-to-understand summaries.

- Explaining Test Results: Users can receive plain-language explanations of lab results, demystifying medical jargon.

- Detecting Health Patterns: By analyzing fitness and health metrics, Claude can identify trends and patterns, offering a holistic view of well-being.

- Preparing for Appointments: The AI can help users formulate pertinent questions for their doctors, fostering more productive consultations.

Anthropic emphasizes that the core aim is to enhance patient-doctor dialogues and keep individuals better informed about their health journey.

Privacy at the Forefront of AI Health

Recognizing the sensitive nature of health data, Anthropic has built Claude for Healthcare with privacy as a foundational principle. Users retain explicit control over the information they choose to share and can disconnect integrations or modify Claude’s permissions at any time. Crucially, Anthropic states that users’ health data will not be used to train its AI models, a commitment that mirrors one made by competitors such as OpenAI.

The Evolving Landscape of AI in Healthcare

Anthropic’s announcement follows closely on the heels of OpenAI’s unveiling of ChatGPT Health, which offers a dedicated experience for secure medical record connection, personalized responses, lab insights, and nutrition advice. This parallel development underscores a growing industry trend: major AI players are rapidly expanding into the healthcare sector, recognizing the immense potential for AI to transform how individuals interact with their health information.

Navigating the Challenges: Accuracy, Responsibility, and Professional Oversight

The rapid expansion of AI into sensitive domains like healthcare has not been without its challenges. Recent incidents, such as Google’s removal of inaccurate AI health summaries, highlight the critical need for caution and accuracy. Both Anthropic and OpenAI have been transparent about the inherent limitations of their AI offerings, unequivocally stating that they are not substitutes for professional healthcare advice.

Anthropic’s Acceptable Use Policy explicitly mandates that any AI-generated outputs in high-risk scenarios—including healthcare decisions, medical diagnosis, patient care, therapy, or mental health guidance—must be reviewed by a qualified professional before dissemination or finalization. Claude is designed to incorporate contextual disclaimers, acknowledge its uncertainties, and consistently direct users to healthcare professionals for personalized guidance, reinforcing a commitment to responsible AI deployment in health.

A Future of Informed Health Management

Claude for Healthcare represents a significant step towards a future where AI acts as an intelligent assistant, giving individuals better access to and understanding of their health data. While the technology promises greater convenience and insight, Anthropic’s emphasis on privacy, user control, and the indispensable role of human medical professionals reflects a balanced and responsible approach to this transformation.


Source: Link