Nvidia’s Vera Rubin AI Superchip Enters ‘Full Production,’ Poised to Transform the AI Landscape

Las Vegas, NV

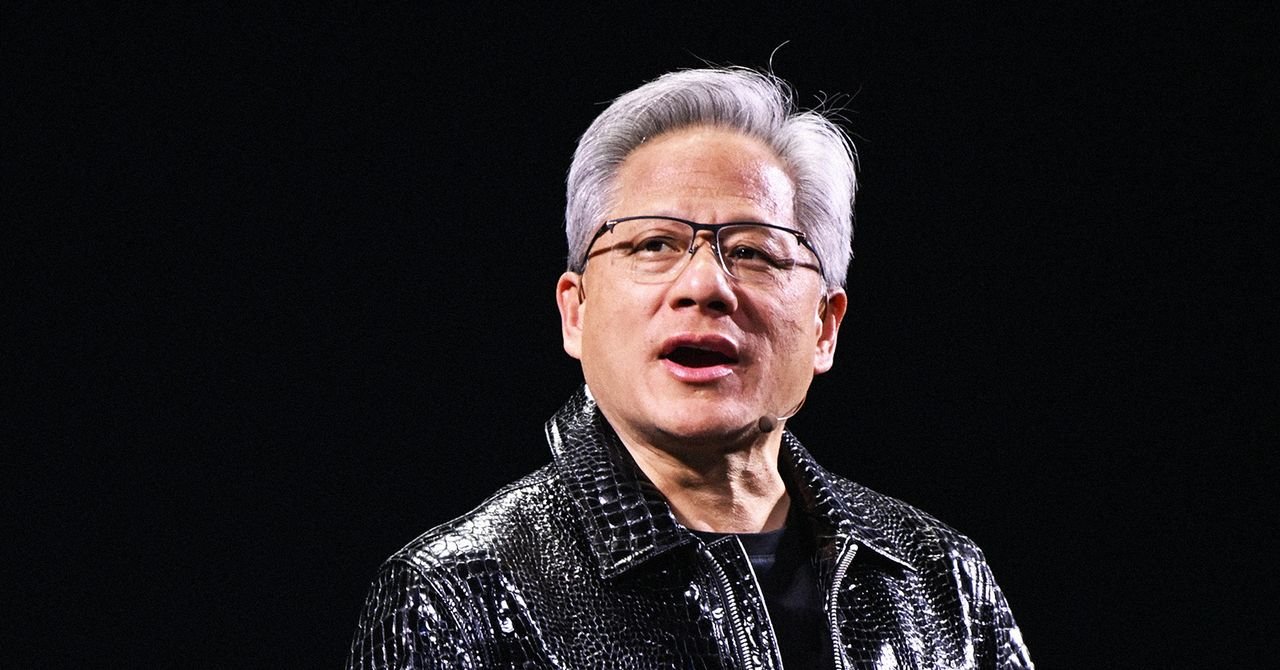

At the annual CES technology trade show, Nvidia CEO Jensen Huang announced that the company's Vera Rubin AI superchip platform is now in 'full production.' The next-generation system, named after the pioneering American astronomer Vera Rubin, is slated to begin reaching customers later this year. Nvidia says it will substantially change the economics and capabilities of artificial intelligence.

The Dawn of Unprecedented Efficiency

Nvidia presents Vera Rubin not as an incremental upgrade but as a major leap in AI processing efficiency. According to the company, Rubin can cut the cost of running AI models to roughly one-tenth of the cost on Blackwell, its current flagship. It can also train large, complex models with roughly one-fourth as many chips as Blackwell requires. If those figures hold in real deployments, they would sharply lower the cost of building and running advanced AI systems and further strengthen Nvidia's position in the hardware ecosystem.

Strategic Partnerships and Early Adoption

Anticipation for Rubin is already high, with industry giants lining up for early access. Microsoft and CoreWeave are among the first partners expected to offer services powered by Rubin chips later this year. Microsoft's large AI data centers under construction in Georgia and Wisconsin are slated to use thousands of the new chips. Beyond cloud providers, Nvidia is also working with Red Hat, a leader in open-source enterprise software, to expand the range of products compatible with the Rubin system, with the aim of encouraging broad industry adoption.

Engineering Marvel: Inside the Rubin Platform

The Vera Rubin platform comprises six distinct chips. These include the Rubin GPU and the Vera CPU, both built on Taiwan Semiconductor Manufacturing Company's 3-nanometer fabrication process. They use the most advanced high-bandwidth memory (HBM) technology available and are connected by Nvidia's sixth-generation interconnect and switching technologies. Huang declared each component of the system 'completely revolutionary and the best of its kind,' emphasizing that Nvidia designed Rubin as an integrated whole rather than as a single chip.

“Full Production”: A Strategic Announcement?

While the 'full production' announcement signals strong progress, industry observers caution that the phrase should not be read too literally. Production of advanced chips like Rubin typically begins at low volumes for rigorous testing and validation before it scales up. Austin Lyons, an analyst at Creative Strategies, suggests that the CES declaration is mainly a message to investors: 'We're on track.' It counters Wall Street rumors of Rubin GPU delays and signals that Nvidia has cleared critical development hurdles and remains confident in a full-scale production ramp-up in the second half of 2026.

Navigating Market Dynamics and Competition

Nvidia's path has not been without setbacks. A design flaw delayed its Blackwell chips in 2024, although shipments were back on schedule by mid-2025. As the AI industry continues its rapid growth, demand for Nvidia's GPUs remains intense, and Rubin is expected to draw similar interest. However, some major players are investing in custom chip designs. OpenAI, for example, is partnering with Broadcom on bespoke silicon. Custom chips give customers greater control over their hardware, which poses a long-term competitive risk to Nvidia.

Despite these competitive pressures, the CES announcements highlight Nvidia's strategic evolution. Lyons notes that Nvidia is moving beyond its role as a GPU provider to become a 'full AI system architect,' covering compute, networking, memory hierarchy, storage, and software orchestration. Even as hyperscalers pour resources into custom silicon, Lyons argues that Nvidia's tightly integrated, end-to-end platform is becoming 'harder to displace,' which reinforces its central role in the future of artificial intelligence.
